Transport maps

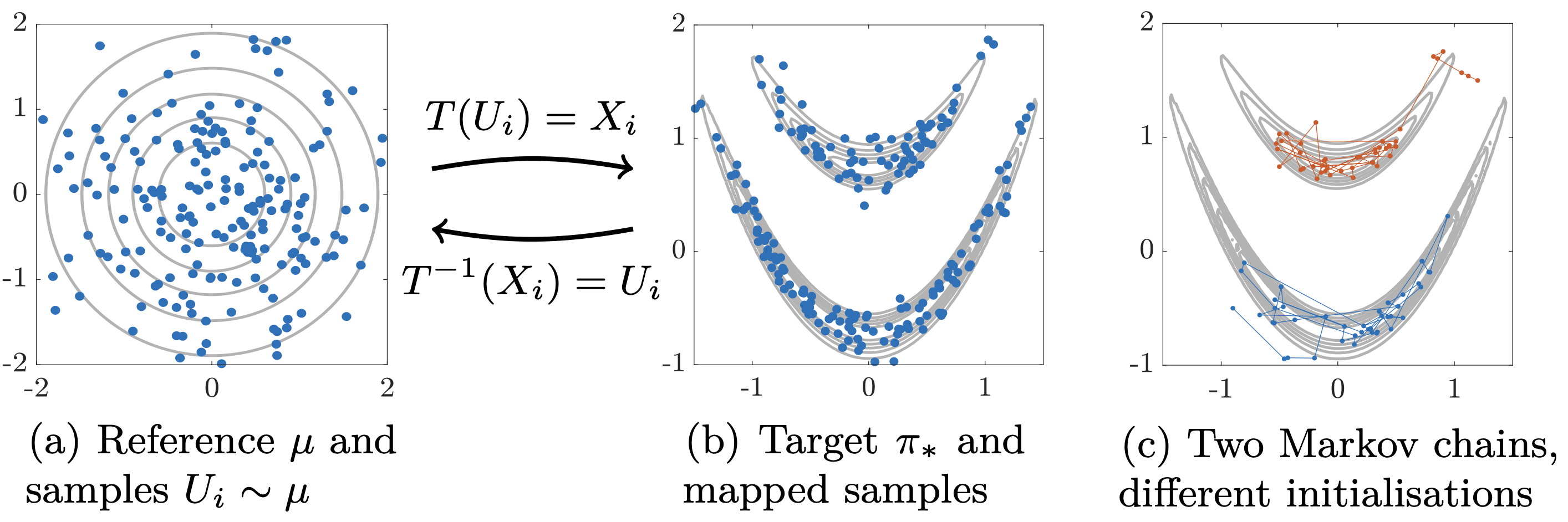

Characterising intractable high-dimensional probability distributions is one of the central tasks in data science, computational physics, machine learning, and many other disciplines. Its origins date back to ENIAC, the first general-purpose digital computer, on which Metropolis, von Neumann, Ulam and other pioneers developed Monte Carlo methods to simulate neutron diffusion. Markov chain Monte Carlo (MCMC), introduced soon afterwards, and its variants have since become the workhorse for this task. However, because each state of a Markov chain depends on the one before it, MCMC methods are inherently sequential and hence challenging to parallelise and optimise. Transport maps offer a promising way to overcome these limitations: they use monotone (order-preserving) transformations to couple a complicated target random variable with an analytically tractable reference random variable, so that target samples can be obtained by transforming independent reference samples.
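As a minimal one-dimensional illustration (our own sketch, not taken from the works cited below), the monotone map sending z to F⁻¹(Φ(z)), where Φ is the standard normal CDF and F is the target CDF, transports a standard normal reference variable to the target. Purely for illustration, we assume an exponential target with a hypothetical parameter `rate`, whose inverse CDF is available in closed form; sampling the target then reduces to sampling the reference and applying the map.

```python
import math
import random
from typing import List


def reference_survival(z: float) -> float:
    """1 - Phi(z) for the standard normal reference, via erfc for tail accuracy."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def transport_map(z: float, rate: float = 2.0) -> float:
    """Monotone map T(z) = F^{-1}(Phi(z)) pushing N(0, 1) forward to Exponential(rate).

    For the exponential target F^{-1}(u) = -log(1 - u) / rate, and 1 - Phi(z)
    is evaluated directly to avoid cancellation for large z.
    """
    return -math.log(reference_survival(z)) / rate


def sample_target(n: int, rate: float = 2.0, seed: int = 0) -> List[float]:
    """Sample the tractable reference, then transport each sample to the target."""
    rng = random.Random(seed)
    return [transport_map(rng.gauss(0.0, 1.0), rate) for _ in range(n)]


if __name__ == "__main__":
    samples = sample_target(20_000)
    # The sample mean should be close to the target mean 1 / rate = 0.5.
    print(sum(samples) / len(samples))
```

Because each reference draw is transported independently, the sampling loop has no sequential dependence and could be parallelised trivially, in contrast with a Markov chain.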

We work on deep tensor-train and polynomial methods for building Knothe-Rosenblatt (KR) rearrangements [1,2]. These methods have been applied to build conditional generative models with high-dimensional parameters and data [4], to importance sampling for simulation- and data-driven rare events [3], and to sequential learning in state-space models [5]. See my presentation at the HDA workshop for an overview of some of this work.
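To make the triangular structure of a KR rearrangement concrete, the sketch below writes it out for a case where everything is available in closed form: a bivariate Gaussian target with hypothetical means, standard deviations and correlation, pushed forward from a standard normal reference. In general the k-th component of the map is built from the conditional CDF of the k-th target variable given the previous ones; for a Gaussian target this reduces to a lower-triangular linear map (the Cholesky factor of the covariance). The methods in [1,2] construct such maps for general, non-Gaussian targets; this sketch does not reproduce them.

```python
import math
import random
from typing import Tuple

# Hypothetical target parameters, chosen only for illustration.
M1, M2 = 1.0, -0.5  # means
S1, S2 = 2.0, 0.5   # standard deviations
RHO = 0.6           # correlation


def kr_map(z1: float, z2: float) -> Tuple[float, float]:
    """Triangular (KR) map from N(0, I) to the bivariate Gaussian target.

    x1 depends only on z1; x2 depends on (z1, z2) and is increasing in z2.
    """
    x1 = M1 + S1 * z1
    # x2 | x1 is Gaussian with mean M2 + RHO * S2 * z1 and
    # standard deviation S2 * sqrt(1 - RHO^2).
    x2 = M2 + S2 * (RHO * z1 + math.sqrt(1.0 - RHO**2) * z2)
    return x1, x2


def kr_inverse(x1: float, x2: float) -> Tuple[float, float]:
    """Inverse map, solved one coordinate at a time thanks to triangularity."""
    z1 = (x1 - M1) / S1
    z2 = ((x2 - M2) / S2 - RHO * z1) / math.sqrt(1.0 - RHO**2)
    return z1, z2


def empirical_correlation(n: int = 20_000, seed: int = 0) -> float:
    """Push reference samples through kr_map and estimate corr(x1, x2)."""
    rng = random.Random(seed)
    xs = [kr_map(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(n)]
    mean1 = sum(x for x, _ in xs) / n
    mean2 = sum(y for _, y in xs) / n
    cov = sum((x - mean1) * (y - mean2) for x, y in xs) / n
    var1 = sum((x - mean1) ** 2 for x, _ in xs) / n
    var2 = sum((y - mean2) ** 2 for _, y in xs) / n
    return cov / math.sqrt(var1 * var2)
```

Inverting the map one coordinate at a time, as `kr_inverse` does, is what makes triangular maps cheap to evaluate in both directions.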

We also extended the Stein variational transport maps of Liu and Wang (Stein variational gradient descent) by introducing Newton-type training algorithms and by using likelihood-informed subspace (LIS) methods to design the reproducing kernels [6]. In addition, we developed optimisation-based transport maps for infinite-dimensional hierarchical inverse problems [7,8].
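For readers new to the Stein variational framework, here is a minimal sketch of the plain, first-order Stein variational gradient descent update of Liu and Wang. It is not the Newton-type method of [6] and uses no LIS-based kernel design. It assumes a one-dimensional Gaussian target, an RBF kernel with a fixed bandwidth and a fixed step size, all hypothetical values chosen only for illustration. Each particle moves along a kernel-weighted average of the target's score plus a repulsive term that keeps the particles apart, so the ensemble is transported towards the target.

```python
import math
from typing import List

# Illustrative target N(MU, SIGMA^2); all constants are assumptions.
MU, SIGMA = 2.0, 1.0
BANDWIDTH = 1.0  # h in the RBF kernel k(x, y) = exp(-(x - y)^2 / h)
STEP = 0.05


def grad_log_target(x: float) -> float:
    """Score of the Gaussian target, d/dx log p(x)."""
    return -(x - MU) / SIGMA**2


def svgd_step(particles: List[float]) -> List[float]:
    """One SVGD update x_i <- x_i + STEP * phi(x_i), using the old positions."""
    n = len(particles)
    updated = []
    for xi in particles:
        phi = 0.0
        for xj in particles:
            k = math.exp(-((xj - xi) ** 2) / BANDWIDTH)
            # Driving term: kernel-weighted score at neighbouring particles.
            phi += k * grad_log_target(xj)
            # Repulsive term: gradient of k(xj, xi) with respect to xj.
            phi += -2.0 * (xj - xi) / BANDWIDTH * k
        updated.append(xi + STEP * phi / n)
    return updated


def run_svgd(n: int = 30, iters: int = 1500) -> List[float]:
    """Start from evenly spaced particles in [-2, 0] and iterate."""
    particles = [-2.0 + 2.0 * i / (n - 1) for i in range(n)]
    for _ in range(iters):
        particles = svgd_step(particles)
    return particles
```

The particles returned by `run_svgd()` should have a sample mean and spread close to those of the target. The fixed bandwidth and step size are the simplest choices; practical implementations usually adapt the bandwidth, for example with the median heuristic used by Liu and Wang.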

[1] Cui, T., & Dolgov, S. (2022). Deep composition of tensor trains using squared inverse Rosenblatt transports. Foundations of Computational Mathematics, 22(6), 1863–1922. https://doi.org/10.1007/s10208-021-09537-5

[2] Cui, T., Dolgov, S., & Zahm, O. (2023). Self-reinforced polynomial approximation methods for concentrated probability densities.

[3] Cui, T., Dolgov, S., & Scheichl, R. (2024). Deep importance sampling using tensor-trains with application to a priori and a posteriori rare event estimation. SIAM Journal on Scientific Computing, C1–C29. https://doi.org/10.1137/23M1546981

[4] Cui, T., Dolgov, S., & Zahm, O. (2023). Scalable conditional deep inverse Rosenblatt transports using tensor trains and gradient-based dimension reduction. Journal of Computational Physics, 112103. https://doi.org/10.1016/j.jcp.2023.112103

[5] (missing reference)

[6] Detommaso, G., Cui, T., Marzouk, Y., Spantini, A., & Scheichl, R. (2018). A Stein variational Newton method. Advances in Neural Information Processing Systems, 9169–9179.

[7] Bardsley, J. M., Cui, T., Marzouk, Y. M., & Wang, Z. (2020). Scalable optimization-based sampling on function space. SIAM Journal on Scientific Computing, 42(2), A1317–A1347. https://doi.org/10.1137/19M1245220

[8] Bardsley, J. M., & Cui, T. (2021). Optimization-based Markov chain Monte Carlo methods for nonlinear hierarchical statistical inverse problems. SIAM/ASA Journal on Uncertainty Quantification, 9(1), 29–64. https://doi.org/10.1137/20M1318365